Scrape Google News for Data-driven Success

Master the art of web scraping with our concise guide on how to scrape Google News for valuable insights. Learn the simple steps to unlock a wealth of information for research or business purposes.

In today's data-driven world, the internet is like a treasure chest full of precious information, and web scraping is like a magic key to unlock all that digital treasure. Learning how to scrape Google News can be a game changer for you, whether you're doing research or you're a business person looking for insights.

In this blog post, we'll learn how to scrape Google News to get useful data. Whether you want to keep an eye on your brand, stay updated with current events, do market research, or check out your competition, web scraping is the tool that will help you find the information you need.

Step 1: Library Installation.

Let's kick things off by installing the necessary libraries. To achieve this, you'll need to input specific commands in your command prompt.

To install these libraries, execute the following commands:

pip install request

pip install pandasStep 2: Configuring Network Requests through SERP API

Now, it's time to set up network requests using the SERP API. This involves preparing the payload and entering your credentials to initiate the API requests. For a smooth rendering of JavaScript, ensure the rendering mode is set to 'html.' This setting instructs the SERP API to handle JavaScript rendering effectively.

Furthermore, set the source as 'google' and specify the target URL as 'url.' Don't forget to replace API_KEY with your sub-account API Key.

https://github.com/serply-inc/examples/blob/master/python/search_google_news.py#L5

Next, utilize the post() method from the request module to post the payload and credentials to the API. If everything is functioning perfectly, you'll receive a code status of "200," indicating successful operation.

Step 3: Inspecting the elements.

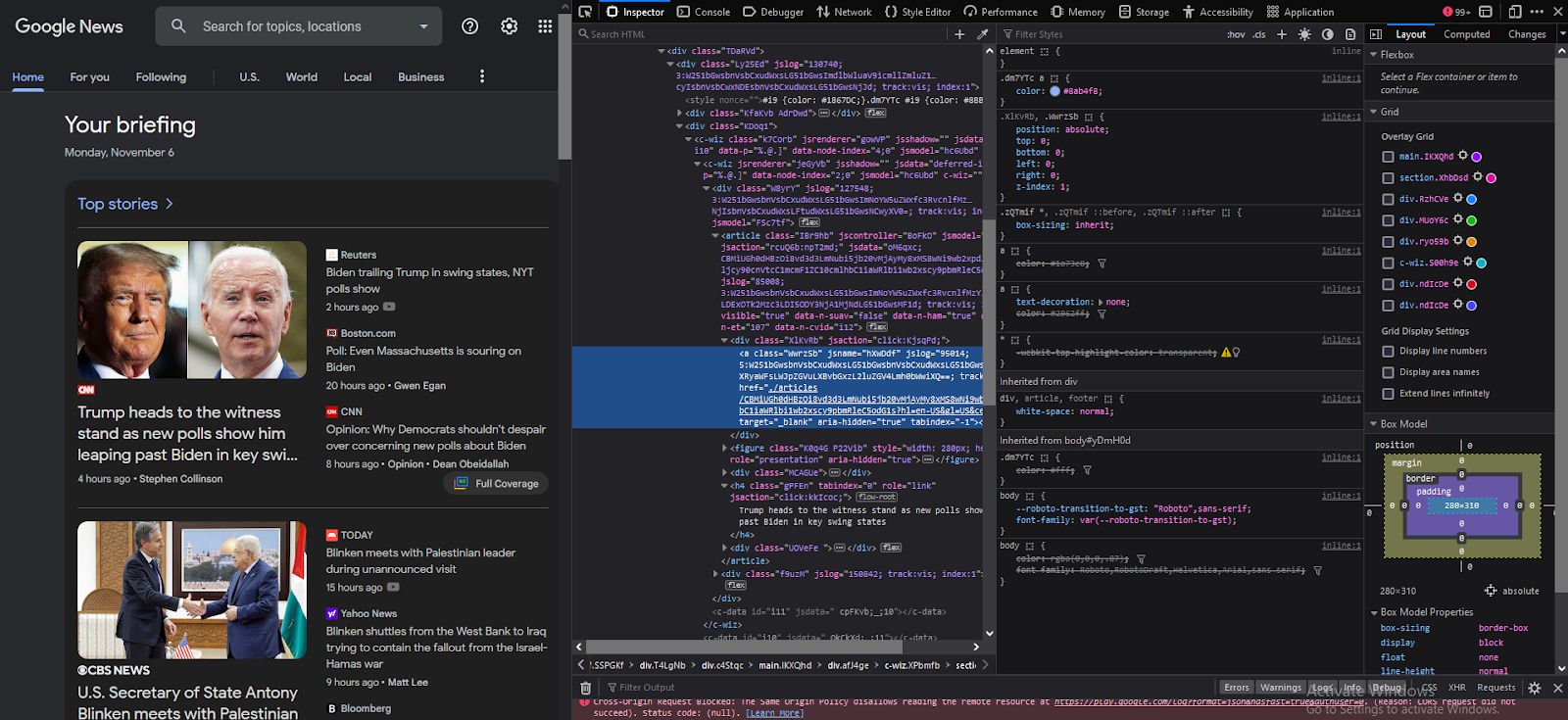

Before you start extracting the news headlines, you need to find the specific HTML elements by using a web browser. Begin by opening the Google News homepage in your web browser and right-click on the page. Alternatively, you can press CTRL + SHIFT + I to access the developer tools, which will appear as depicted below:

Step 4: Getting the Data

API_KEY = os.environ.get("API_KEY")

# set the api key in headers

headers = {"apikey": API_KEY}

# format the query

# q: the search term

# hl: return results in English

query = {"q": "global markets"}

# build to url to make request

url = f"https://api.serply.io/v1/news/" + urllib.parse.urlencode(query)

resp = requests.get(url, headers=headers)

results = resp.json()https://github.com/serply-inc/examples/blob/master/python/search_google_news.py#L5

How to perform the query

API_KEY = os.environ.get("API_KEY")

# set the api key in headers

headers = {"apikey": API_KEY}

# format the query

# q: the search term

# hl: return results in English

query = {"q": "global markets"}

# build to url to make request

url = f"https://api.serply.io/v1/news/" + urllib.parse.urlencode(query)

resp = requests.get(url, headers=headers)

results = resp.json()

Now, let's dig in a bit deeper. We've noticed that all the important news headlines are tucked away inside the <h4> tag.

To capture this data, we'll use the Beautiful Soup module we brought in earlier. We'll create a list called 'data' to gather and keep all these headlines.

Step 6: Exporting the data to a CSV

Next, save the information into a data frame, and after that, you can export it to a CSV file with the 'to_csv()' method. To prevent the CSV file from having an additional index column, you can set the index to 'False'.

import csv

f = csv.writer(open("news.csv", "w", newline=''))

# Write CSV Header, If you dont need that, remove this line

f.writerow(["title", "link", "summary", "source_title", "source_href"])

for entry in results["entries"]:

f.writerow([

entry['title'],

entry['link'],

entry['summary'],

entry['source']["title"],

entry['source']["href"],

])Exporting google news to csv

import csv

f = csv.writer(open("news.csv", "w", newline=''))

# Write CSV Header, If you dont need that, remove this line

f.writerow(["title", "link", "summary", "source_title", "source_href"])

for entry in results["entries"]:

f.writerow([

entry['title'],

entry['link'],

entry['summary'],

entry['source']["title"],

entry['source']["href"],

])Conclusion.

And there you have it! You've learned the basics of scraping Google News. This skill can be a game-changer for research or business insights. With web scraping, you're ready to dive into a world of valuable data. Happy scraping!